Analysis of Merge Sort

First, let us consider the running time of the procedure Merge(A, p, q, r). Let n = r − p + 1 denote the combined length of the left and right subarrays. What is the running time of Merge as a function of n? The algorithm contains four loops (none nested inside another). It is easy to see that each loop executes at most n times. (If you are a bit more careful, you can see that all the while-loops together execute exactly n times in total, because each iteration copies one new element into the array B, and B only has space for n elements.) Thus the running time to merge n items is Θ(n). Let us write this without the asymptotic notation, simply as n. (We'll see later why we do this.)

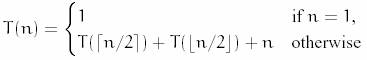
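As a minimal sketch of the counting argument, here is a Python version of Merge, assuming 0-based inclusive indices p..r and a Python list as the scratch array B (the index convention and the return value are illustrative assumptions, not the original pseudocode). It returns the number of elements copied into B, which is always n = r − p + 1.

```python
def merge(A, p, q, r):
    """Merge sorted A[p..q] and A[q+1..r] (0-based, inclusive) in place.

    Returns how many elements were copied into the scratch array B,
    which is exactly n = r - p + 1.
    """
    B = []                      # scratch array; ends up holding n elements
    i, j = p, q + 1
    # Loop 1: both halves still have items; copy the smaller front item.
    while i <= q and j <= r:
        if A[i] <= A[j]:
            B.append(A[i])
            i += 1
        else:
            B.append(A[j])
            j += 1
    # Loop 2: copy whatever remains of the left half.
    while i <= q:
        B.append(A[i])
        i += 1
    # Loop 3: copy whatever remains of the right half.
    while j <= r:
        B.append(A[j])
        j += 1
    # Loop 4: copy B back into A[p..r].
    for k in range(len(B)):
        A[p + k] = B[k]
    return len(B)


A = [2, 5, 9, 1, 3, 8]
print(merge(A, 0, 2, 5), A)   # 6 [1, 2, 3, 5, 8, 9]
```

Every iteration of the three while-loops appends one element to B, so together they run n times, and the copy-back loop runs n more times: the total work is linear in n.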

Now, how do we describe the running time of the entire MergeSort algorithm? We will do this through the use of a recurrence, that is, a function that is defined recursively in terms of itself. To avoid circularity, the recurrence for a given value of n is defined in terms of values that are strictly smaller than n. Finally, a recurrence has some basis values (e.g. for n = 1), which are defined explicitly.

Let T(n) denote the worst-case running time of MergeSort on an array of length n. If we call MergeSort with an array containing a single item (n = 1), the running time is constant; ignoring constants, we simply write T(1) = 1. For n > 1, MergeSort splits the array into two halves, sorts each half, and then merges them together. The left half has size ⌈n/2⌉ and the right half has size ⌊n/2⌋. How long does it take to sort the subarray of size ⌈n/2⌉? We do not know this yet, but because ⌈n/2⌉ < n for n > 1, we can express it as T(⌈n/2⌉). Similarly, the time taken to sort the right subarray is T(⌊n/2⌋). Merging the two sorted halves takes n, as computed above. In conclusion we have

$$
T(n) =
\begin{cases}
1 & \text{if } n = 1,\\
T(\lceil n/2 \rceil) + T(\lfloor n/2 \rfloor) + n & \text{if } n > 1.
\end{cases}
$$

This is called a recurrence relation, that is, a recursively defined function. Divide-and-conquer is an important design technique, and it naturally gives rise to recursive algorithms. It is thus important to develop mathematical techniques for solving recurrences, either exactly or asymptotically.
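As a minimal sketch, the recurrence T(1) = 1, T(n) = T(⌈n/2⌉) + T(⌊n/2⌋) + n for n > 1 can be evaluated directly in Python. The function name T and the memoization via `functools.lru_cache` are illustrative choices; ⌈n/2⌉ is computed as `(n + 1) // 2` and ⌊n/2⌋ as `n // 2` to stay in integer arithmetic.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def T(n: int) -> int:
    """T(1) = 1; T(n) = T(ceil(n/2)) + T(floor(n/2)) + n for n > 1."""
    if n == 1:
        return 1                      # basis value, defined explicitly
    left = (n + 1) // 2               # ceil(n/2): size of the left half
    right = n // 2                    # floor(n/2): size of the right half
    return T(left) + T(right) + n     # sort both halves, then merge n items


print([T(n) for n in (1, 2, 3, 4, 5, 8, 16, 32)])
# [1, 4, 8, 12, 17, 32, 80, 192]
```

Each call only refers to strictly smaller arguments, so the recursion always reaches the basis n = 1.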

Let’s expand the terms.

T(1) = 1

T(2) = T(1) + T(1) + 2 = 1 + 1 + 2 = 4

T(3) = T(2) + T(1) + 3 = 4 + 1 + 3 = 8

T(4) = T(2) + T(2) + 4 = 4 + 4 + 4 = 12

T(5) = T(3) + T(2) + 5 = 8 + 4 + 5 = 17

. . .

T(8) = T(4) + T(4) + 8 = 12 + 12 + 8 = 32

. . .

T(16) = T(8) + T(8) + 16 = 32 + 32 + 16 = 80

. . .

T(32) = T(16) + T(16) + 32 = 80 + 80 + 32 = 192
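To check that these numbers model what MergeSort actually does, here is a minimal instrumented sketch in Python. The function name `merge_sort_cost` is hypothetical, and the cost model (1 for a call on a single item, n for merging n items, regardless of input order) is an assumption matching the accounting used in the text, not a count of machine operations.

```python
def merge_sort_cost(a):
    """Return (sorted copy of a, cost), charging 1 for a call on at most one
    item and n for merging n items."""
    n = len(a)
    if n <= 1:
        # Basis: constant cost, written as 1. (The recurrence only covers
        # n >= 1; an empty list is also charged 1 here for simplicity.)
        return list(a), 1
    mid = (n + 1) // 2                   # left half gets ceil(n/2) items
    left, cost_left = merge_sort_cost(a[:mid])
    right, cost_right = merge_sort_cost(a[mid:])
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])              # leftover items from the left half
    merged.extend(right[j:])             # leftover items from the right half
    return merged, cost_left + cost_right + n   # charge n for the merge


for n in (1, 2, 3, 4, 5, 8, 16, 32):
    data = list(range(n, 0, -1))         # reverse-sorted input
    result, cost = merge_sort_cost(data)
    assert result == sorted(data)
    print(n, cost)
```

Because the merge is always charged n, the cost under this model does not depend on the input order, so it coincides with the worst-case T(n) for every input of length n.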

What is the pattern here? Let's consider the ratios T(n)/n for powers of 2:

| n  | T(n) | T(n)/n |
|----|------|--------|
| 1  | 1    | 1      |
| 2  | 4    | 2      |
| 4  | 12   | 3      |
| 8  | 32   | 4      |
| 16 | 80   | 5      |
| 32 | 192  | 6      |
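A small Python sketch can extend this table further, up to n = 2^10. The helper name T is illustrative; it re-implements the recurrence T(1) = 1, T(n) = T(⌈n/2⌉) + T(⌊n/2⌋) + n without memoization, which is cheap here because n stays small.

```python
def T(n):
    """T(1) = 1; T(n) = T(ceil(n/2)) + T(floor(n/2)) + n for n > 1."""
    if n == 1:
        return 1
    return T((n + 1) // 2) + T(n // 2) + n


for k in range(11):
    n = 2 ** k
    t = T(n)
    assert t % n == 0                    # the ratio is an integer for powers of 2
    print(f"n = {n:5d}   T(n) = {t:6d}   T(n)/n = {t // n}")
```

For n that is not a power of 2 the ratio is not an integer (for example T(3)/3 = 8/3 ≈ 2.67, while log₂ 3 + 1 ≈ 2.58), which is why the pattern is read off from powers of 2 only.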

This suggests that, for n a power of 2, T(n)/n = lg n + 1 (where lg denotes the base-2 logarithm), or equivalently T(n) = n lg n + n, which is Θ(n log n) (using the limit rule).
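The pattern is read off from only a few values. A short induction sketch confirms it for n = 2^k, where both halves have size exactly n/2 = 2^(k−1), so the recurrence becomes T(2^k) = 2T(2^(k−1)) + 2^k:

$$
\begin{aligned}
&\text{Claim: } T(2^k) = 2^k (k + 1) \text{ for all } k \ge 0.\\
&\text{Basis } (k = 0):\quad T(1) = 1 = 2^0 (0 + 1).\\
&\text{Step } (k \ge 1):\quad T(2^k) = 2\,T(2^{k-1}) + 2^k = 2 \cdot 2^{k-1} k + 2^k = 2^k (k + 1).
\end{aligned}
$$

Substituting k = lg n gives T(n) = n (lg n + 1) = n lg n + n.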
